Docker - Overview

Currently, Docker holds over 32 percent of the containerization technologies market, and this share is expected to grow. In general, containerization software lets you run applications in isolated environments without launching an entire virtual machine.

Docker eliminates many repetitive and time-consuming configuration tasks, which allows for quick and efficient application development in both desktop and cloud environments. However, to get comfortable with Docker, it’s important to clearly understand its underlying architecture and how its parts fit together.

In this chapter, let’s take an overview of Docker and see how its various components work and interact with each other.

What is Docker?

Docker is an open-source platform for developing, shipping, and running applications. It lets you separate your applications from your infrastructure so that you can deliver software quickly. Because Docker lets you manage your infrastructure the same way you manage your applications, it shortens the time between writing code and running it in production.

In the Docker ecosystem, applications are packaged and run inside containers, which are loosely isolated environments. This isolation and security let you run many containers simultaneously on a single host. Containers are lightweight and include everything the application needs to run, so you don’t need to rely on what is installed on the host. Because everyone you share a container with gets the same container, which behaves the same way, collaboration is smooth.

Docker provides us with comprehensive tooling and a platform for managing the container lifecycle −

- You can develop your application and its supporting components using containers.

- You can use containers as the distribution and testing unit for all your applications.

- Docker allows you to deploy applications into all environments seamlessly and consistently, whether on local data centers, cloud platforms, or hybrid infrastructures.

Why is Docker Used?

Rapid Application Development and Delivery

Docker speeds up application development cycles by giving developers standardized environments in the form of local containers. Containers fit naturally into continuous integration and continuous delivery (CI/CD) workflows, where they help ensure fast and consistent application delivery.

Consider the following example scenario −

- The developers in your team write code on their local systems and share their work with teammates using Docker containers.

- Then, they can use Docker to deploy their applications into a test environment where they can run automated or manual tests.

- If a bug is found, they can fix it in the development environment, verify the build, and redeploy it to the test environment for further testing.

- After the testing is done, deploying the application to the production environment and getting the feature to the customer is as simple as pushing the updated image to the production environment.
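As a rough illustration of this workflow, the Python sketch below assembles the docker CLI calls for a build, test, and ship cycle and can run them in order. The image name registry.example.com/team/app:1.0 and the test command are hypothetical placeholders. The sketch assumes the Docker CLI is installed, that a Dockerfile is in the current directory, and that you are logged in to the registry.

```python
import shlex
import subprocess


def pipeline_commands(image: str, test_cmd: list[str]) -> list[list[str]]:
    """Return the docker CLI calls for a build -> test -> ship flow.

    `image` and `test_cmd` are hypothetical placeholders for your own values.
    """
    return [
        # Build an image from the Dockerfile in the current directory.
        ["docker", "build", "-t", image, "."],
        # Run the tests inside a throwaway container (--rm deletes it afterwards).
        ["docker", "run", "--rm", image, *test_cmd],
        # Publish the tested image so other environments can pull it.
        ["docker", "push", image],
    ]


def run_pipeline(image: str, test_cmd: list[str]) -> None:
    """Execute each step, stopping at the first failing command (requires Docker)."""
    for cmd in pipeline_commands(image, test_cmd):
        print("+", shlex.join(cmd))
        subprocess.run(cmd, check=True)
```

For example, run_pipeline("registry.example.com/team/app:1.0", ["pytest", "-q"]) would build, test, and push in one go, assuming the image contains pytest. Because check=True raises on a non-zero exit code, an image whose tests fail is never pushed.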

Responsive Deployment and Scaling

Because Docker is a container-based platform, it enables highly portable workloads that run seamlessly across various environments. Its portability and lightweight nature also make it easy to manage workloads dynamically. As a result, businesses can scale applications up or down in near real time as demand changes.

Maximizing Hardware Utilization

Docker is a lightweight, cost-effective alternative to hypervisor-based virtual machines, so you can use more of your server capacity. It is well suited to high-density environments and to small and medium deployments, which allows businesses to achieve more with limited resources.

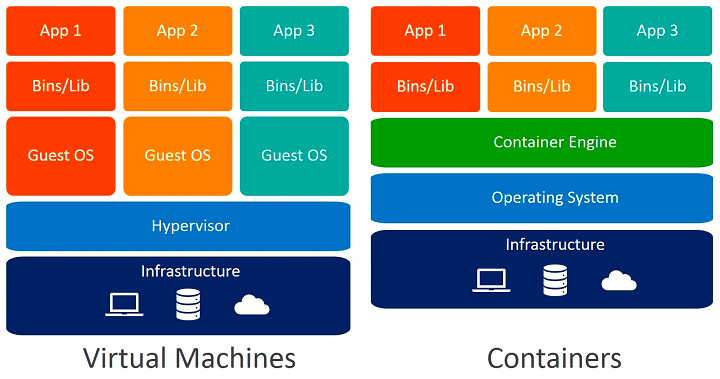

Docker Containers vs Virtual Machines

Virtual machines (VMs) and Docker containers are two widely used technologies in modern computing environments, but they serve different purposes and offer different benefits. Choosing between them for a given use case requires an understanding of their differences.

Architecture

Docker Containers − Docker containers are lightweight and portable, and they share the host OS kernel. They run on top of the host OS and encapsulate the application and its dependencies.

Virtual Machines − Virtual machines, on the other hand, emulate complete hardware on top of a hypervisor and run a full guest OS. Each VM runs its own OS instance, independent of the host OS.

Resource Efficiency

Docker Containers − In terms of resource utilization, Docker containers are highly efficient because they share the host OS kernel and require fewer resources than VMs.

Virtual Machines − VMs consume more resources because each one runs an entire operating system and needs its own allocation of memory, disk space, and CPU.

Isolation

Docker Containers − Containers provide process-level isolation: they share the host OS kernel but have separate filesystems, process trees, and network stacks. This is achieved with Linux kernel features: namespaces isolate what a process can see, and control groups (cgroups) limit the resources it can use.

Virtual Machines − Comparatively, VMs offer stronger isolation because each VM runs its own kernel and has dedicated resources. As a result, VMs generally provide a stronger security boundary, but they are also heavier.

Portability

Docker Containers − As long as Docker is installed, containers run consistently across different environments, from development to production. This makes them highly portable.

Virtual Machines − VMs are less portable than containers because of differences in underlying hardware and hypervisor configurations. However, they can be moved between environments to some extent using disk images.

Startup Time

Docker Containers − Containers start almost instantly because they use the host OS kernel instead of booting their own. This makes them well suited to microservices architectures and rapid scaling.

Virtual Machines − VMs typically take longer to start because they need to boot an entire OS. This results in slower startup times compared to containers.

Use Cases

Docker Containers − Docker containers are best suited for microservices architectures, CI/CD pipelines, and applications that require rapid deployment and scaling.

Virtual Machines − VMs are preferred for running legacy applications and for workloads with strict security requirements where strong isolation is necessary.

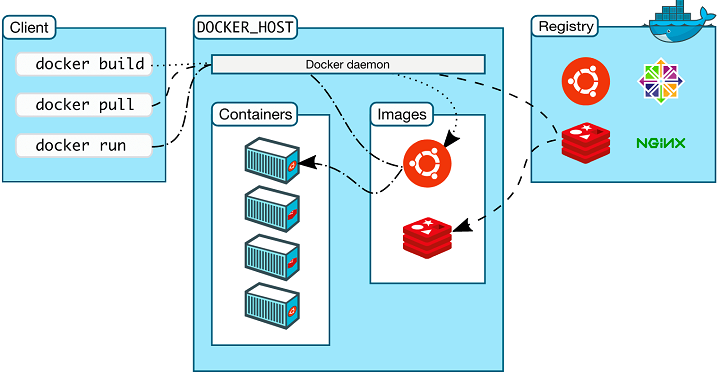

Docker Architecture

Docker uses a client-server architecture. The Docker client communicates with the Docker daemon, which builds, manages, and distributes your Docker containers. The Docker daemon does all the heavy lifting.

The Docker client and daemon can run on the same machine, or you can connect a Docker client to a remote Docker daemon. They communicate using a REST API, over UNIX sockets or a network interface.
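To make the client-server split concrete, here is a minimal Python sketch that talks to the daemon's REST API directly over its UNIX socket, without the docker CLI. It assumes a Linux host where the daemon listens on the default socket /var/run/docker.sock and that your user is allowed to access it (typically root or a member of the docker group). It sends an HTTP/1.0 request so the reply is not chunked and the daemon closes the connection when it is done; a real client would use a full HTTP library or an official Docker SDK instead.

```python
import json
import socket

# Default daemon socket on Linux (an assumption; adjust for your installation).
DEFAULT_SOCKET = "/var/run/docker.sock"


def build_get_request(path: str) -> bytes:
    """Build a bare HTTP/1.0 GET request (no chunked reply; server closes when done)."""
    return f"GET {path} HTTP/1.0\r\n\r\n".encode("ascii")


def parse_response(raw: bytes) -> tuple[int, bytes]:
    """Split a raw HTTP response into (status code, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0]  # e.g. b"HTTP/1.0 200 OK"
    return int(status_line.split()[1]), body


def docker_api_get(path: str, socket_path: str = DEFAULT_SOCKET):
    """Send GET `path` to the daemon over its UNIX socket and decode the JSON reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_get_request(path))
        chunks = []
        # Read until the daemon closes the connection.
        while chunk := sock.recv(4096):
            chunks.append(chunk)
    status, body = parse_response(b"".join(chunks))
    if status != 200:
        raise RuntimeError(f"daemon returned HTTP {status}: {body[:200]!r}")
    return json.loads(body)
```

With a running daemon, docker_api_get("/version") returns the same kind of information that the docker version command displays, such as the "Version" and "ApiVersion" fields. This is the same API the docker CLI uses behind the scenes.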

Docker Daemon

The Docker daemon, also known as dockerd, listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

Docker Client

Most users interact with Docker through the Docker client, the docker command-line interface (CLI). When you run a command such as docker run, the client sends it to dockerd, which carries it out. The Docker client can communicate with more than one daemon.

Docker Desktop

Docker Desktop is an easy way to get started with Docker. It is an application for Windows, Mac, and Linux that lets you perform all Docker-related tasks, and it bundles the Docker daemon, the Docker client, Docker Compose, Kubernetes, and other tools.

Docker Registries

A Docker registry stores Docker images. Docker Hub is the most popular public registry, and anyone can use it. By default, Docker looks for images on Docker Hub.

When you run the docker pull or docker run command, Docker pulls the required images from your configured registry. When you run the docker push command, Docker pushes your image to your configured registry.
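The default-registry rule can be illustrated with a simplified Python sketch that expands a short image name into its fully qualified form. It follows the commonly documented conventions (docker.io as the default registry, the library/ namespace for official images, latest as the default tag) but skips validation and other edge cases, so treat it as a model rather than Docker's actual reference parser.

```python
DEFAULT_REGISTRY = "docker.io"  # Docker Hub


def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to registry/path:tag (simplified, no validation)."""
    name, at, digest = ref.partition("@")
    # A tag is a ':' that appears after the last '/', so a registry port
    # such as localhost:5000 is not mistaken for a tag.
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    else:
        tag = ""
    first, sep, rest = name.partition("/")
    # The first component is treated as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, name
    # Official Docker Hub images live under the "library" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path
    # Without a tag or digest, the "latest" tag is assumed.
    if not tag and not at:
        tag = "latest"
    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if at:
        result += f"@{digest}"
    return result
```

With this model, normalize_image_ref("ubuntu") gives "docker.io/library/ubuntu:latest", which is why docker pull ubuntu fetches the official Ubuntu image from Docker Hub, while a reference such as "localhost:5000/myapp:1.0" points at a different registry.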

Docker Objects

Docker has images, containers, networks, volumes, plugins, and other objects associated with it that you will use throughout the Docker journey. Let’s look at a brief overview of some of those objects.

Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image with some additional customization. For example, you can build an image on top of the Ubuntu base image that adds the Apache web server, your application, and the configuration your application needs.

You can create your own images or use images that others have created and published in a registry. To build a custom image, you write a Dockerfile that defines the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image.

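When you change the Dockerfile and rebuild the image, Docker reuses the cached layers that come before the first changed instruction and rebuilds only that layer and the ones after it. This is part of what makes Docker images lightweight, small, and fast compared with other virtualization technologies.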
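The following Python sketch models how the build cache decides what to rebuild. Each instruction gets a cache key derived from its own text and from the key of the layer beneath it, so changing one instruction invalidates that layer and every layer after it. This is a simplification: the real builder also compares the contents of files used by COPY and ADD, which the sketch ignores. The Dockerfile lines used in the example are hypothetical, mirroring the Ubuntu and Apache example above.

```python
import hashlib


def layer_keys(instructions: list[str]) -> list[str]:
    """Return one cache key per instruction; each key depends on all earlier instructions."""
    keys, parent = [], ""
    for instruction in instructions:
        # Chain the parent key into the hash, like a layer built on top of its parent.
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def layers_to_rebuild(old: list[str], new: list[str]) -> list[str]:
    """Instructions in `new` whose layer cannot be reused from a build of `old`."""
    cached = set(layer_keys(old))
    return [ins for ins, key in zip(new, layer_keys(new)) if key not in cached]
```

For example, with old = ["FROM ubuntu:22.04", "RUN apt-get update && apt-get install -y apache2", "COPY site/ /var/www/html/", 'CMD ["apache2ctl", "-D", "FOREGROUND"]'], changing only the RUN line to also install curl makes layers_to_rebuild return the RUN, COPY, and CMD lines, while the FROM layer is reused. This is why instructions that change rarely are usually placed near the top of a Dockerfile.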

Containers

A Docker container is a runnable instance of a Docker image. You can create, start, stop, move, or delete a container using the Docker API or CLI. A container is defined by its image and by the configuration options you provide when you create or start it. When you remove a container, any changes to its state that are not stored in persistent storage, such as a volume, are lost.

The following example command creates and runs a Docker container −

```shell
docker run -i -t ubuntu /bin/bash
```

The following happens when you run this command −

- If you do not have the ubuntu image locally, Docker pulls it from your configured registry (Docker Hub by default), as though you had run docker pull ubuntu manually.

- Docker creates a new container, as though you had run a docker create command manually.

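- Docker allocates a read-write filesystem to the container as its final layer, so the running container can create or modify files and directories in its local filesystem. It also creates a network interface that connects the container to the default network and assigns the container an IP address.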

- Docker starts the container and executes /bin/bash. The -i flag keeps standard input open and the -t flag allocates a pseudo-terminal, so the container runs interactively, attached to your terminal, and you can type further commands.
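The steps above can be reproduced by hand. This Python sketch runs the equivalent docker pull, docker create, and docker start commands one after another through subprocess, assuming the Docker CLI is installed and you run it from an interactive terminal. One difference from docker run is that docker pull always checks the registry for a newer image, whereas docker run only pulls when the image is missing locally.

```python
import subprocess


def pull_cmd(image: str) -> list[str]:
    # Fetch the image from the configured registry.
    return ["docker", "pull", image]


def create_cmd(image: str, command: list[str]) -> list[str]:
    # Create (but do not start) a container; -i and -t mirror docker run -i -t.
    return ["docker", "create", "-i", "-t", image, *command]


def start_cmd(container_id: str) -> list[str]:
    # Start the container and attach its output (-a) and standard input (-i) to this terminal.
    return ["docker", "start", "-a", "-i", container_id]


def run_in_steps(image: str, command: list[str]) -> int:
    """Roughly equivalent to `docker run -i -t IMAGE COMMAND`; returns docker start's exit status."""
    subprocess.run(pull_cmd(image), check=True)
    # docker create prints the new container's ID on standard output.
    created = subprocess.run(create_cmd(image, command), check=True,
                             capture_output=True, text=True)
    container_id = created.stdout.strip()
    # No check=True here: a non-zero exit from the shell inside the container is not an error.
    return subprocess.run(start_cmd(container_id)).returncode
```

Calling run_in_steps("ubuntu", ["/bin/bash"]) drops you into the same kind of shell as the docker run example. As with docker run without --rm, the stopped container is kept afterwards until you remove it.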

Networks

Docker networks let containers communicate with each other. Although containers are isolated by default, you can use Docker to create virtual networks through which containers communicate securely. Networks can be simple or complex, and different network drivers provide different functionality.

For basic connectivity, you can use the default bridge network or create custom networks for your applications. Docker networks make it simple to build multi-container applications in which services need to communicate with one another.
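A user-defined bridge network is a common starting point for multi-container applications, and containers attached to one can reach each other by container name. The Python sketch below lists the docker CLI calls for that setup and for cleaning it up. The network name app-net, the container name cache, and the choice of the redis and busybox images are illustrative assumptions.

```python
import subprocess


def network_demo_commands(network: str = "app-net") -> list[list[str]]:
    """CLI calls for two containers talking over a user-defined bridge network (names are hypothetical)."""
    return [
        # Create the network; bridge is also the default driver if --driver is omitted.
        ["docker", "network", "create", "--driver", "bridge", network],
        # Start a Redis server in the background, attached to the network as "cache".
        ["docker", "run", "-d", "--name", "cache", "--network", network, "redis"],
        # From a second container on the same network, resolve and ping "cache" by name.
        ["docker", "run", "--rm", "--network", network, "busybox", "ping", "-c", "1", "cache"],
    ]


def cleanup_commands(network: str = "app-net") -> list[list[str]]:
    # Remove the demo container first, then the now-unused network.
    return [["docker", "rm", "-f", "cache"], ["docker", "network", "rm", network]]


def run_all(cmds: list[list[str]]) -> None:
    """Run each command in order, stopping at the first failure (requires Docker)."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)
```

Running run_all(network_demo_commands()) and then run_all(cleanup_commands()) shows the busybox container reaching cache by name, which works on user-defined networks but not by default on the built-in bridge network.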

Storage

Conventional applications depend on the storage system of the host they run on. Docker provides a more flexible approach with volumes, which are storage locations managed by Docker that keep data outside the container itself. This keeps your data safe even when the container is stopped or removed.

For persistent storage, you can bind-mount specific directories from the host machine or create named volumes to manage application data. By separating your application's data from the container lifecycle, Docker volumes make data management easier and keep the data persistent.
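Both storage options can be requested with the --mount flag of docker run. The Python sketch below builds such a command; the volume name, host directory, target paths, and image are hypothetical. Docker creates a named volume if it does not already exist, whereas the source directory of a bind mount must already exist on the host.

```python
def storage_run_cmd(volume: str, host_dir: str, image: str) -> list[str]:
    """Build a docker run command with one named volume and one read-only bind mount."""
    return [
        "docker", "run", "-d",
        # Named volume managed by Docker; its data outlives this container.
        "--mount", f"type=volume,source={volume},target=/data",
        # Existing host directory mapped into the container, mounted read-only.
        "--mount", f"type=bind,source={host_dir},target=/config,readonly",
        image,
    ]
```

You could pass the result to subprocess.run, for example storage_run_cmd("app-data", "/srv/app/config", "nginx"). If you later remove that container and start a new one with the same app-data volume, the files written to /data are still there.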

Conclusion

In conclusion, companies looking to simplify application development, deployment, and management will find that Docker's containerization technology presents a strong option.

Docker's methodology accelerates development cycles, facilitates scalability and portability, and maximizes the use of hardware resources. Businesses can increase the agility, efficacy, and cost-effectiveness of their software delivery processes by utilizing Docker containers.

Frequently Asked Questions

Q1. What is the core concept of Docker?

Containerization is the fundamental idea behind Docker. Docker packages your application and all of its dependencies into a container, a self-contained unit that runs in isolation from other containers and behaves consistently across different environments. Think of it as a preconfigured box that holds everything your application needs to run properly, wherever it is.

Q2. What is Docker best used for?

Docker is a great tool for developing, deploying, and managing applications more quickly. Its lightweight containers make applications easier and faster to build.

Containers also make applications easy to scale and to move between environments. Furthermore, because containerized applications can be shared, Docker streamlines teamwork.

Q3. What is the lifecycle of a Docker container?

A Docker container's lifecycle starts with an image, which serves as a blueprint. Container instances are then created and started from this image.

Containers can be started, stopped, paused, and restarted as needed. Lastly, once they are no longer needed, containers can be removed.
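The lifecycle can be pictured as a small state machine. The Python sketch below is a simplified model, not Docker's implementation: the state names follow the container status values Docker reports (created, running, paused, exited), "removed" is a pseudo-state added for the model, and real Docker has further states (such as restarting and dead) and options such as docker rm -f that the model leaves out.

```python
# (state, command) -> next state; a simplified subset of Docker's behavior.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("running", "restart"): "running",
    ("exited", "start"): "running",
    ("exited", "restart"): "running",
    ("created", "rm"): "removed",  # "removed" is a pseudo-state for this model
    ("exited", "rm"): "removed",
}


class ContainerModel:
    """Tracks one container's state as CLI-style commands are applied."""

    def __init__(self) -> None:
        self.state = "created"  # state after docker create (docker run also starts it)

    def apply(self, command: str) -> str:
        try:
            self.state = TRANSITIONS[(self.state, command)]
        except KeyError:
            raise ValueError(f"cannot {command} a container that is {self.state}") from None
        return self.state
```

Applying start, pause, unpause, stop, and rm in that order ends in the removed state, while calling apply("rm") on a running container raises ValueError, mirroring how docker rm refuses to remove a running container unless you force it.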

Q4. Is Docker a PaaS or IaaS?

Docker is neither an infrastructure-as-a-service (IaaS) nor a platform-as-a-service (PaaS) offering. It is a containerization technology for packaging and running applications.

IaaS delivers virtualized computing resources, while PaaS offers a complete development and deployment environment. Docker works well with both IaaS and PaaS solutions.

Q5. What is a Dockerfile?

A Dockerfile is a text document that contains instructions for building a Docker image. It specifies the operating system, libraries, and application code that the image must contain. Think of it as a recipe that tells Docker exactly which components to include and which steps to run to produce a working container image for your application.
